Rendering Decomposed Recordings of Musical Performances

Florian Thalmann, Queen Mary University of London

Summary:

Using ontologies, signal processing, and semantic rendering techniques to provide malleable educational and analytical user experiences based on the original audio rather than synthesized material.

Description:

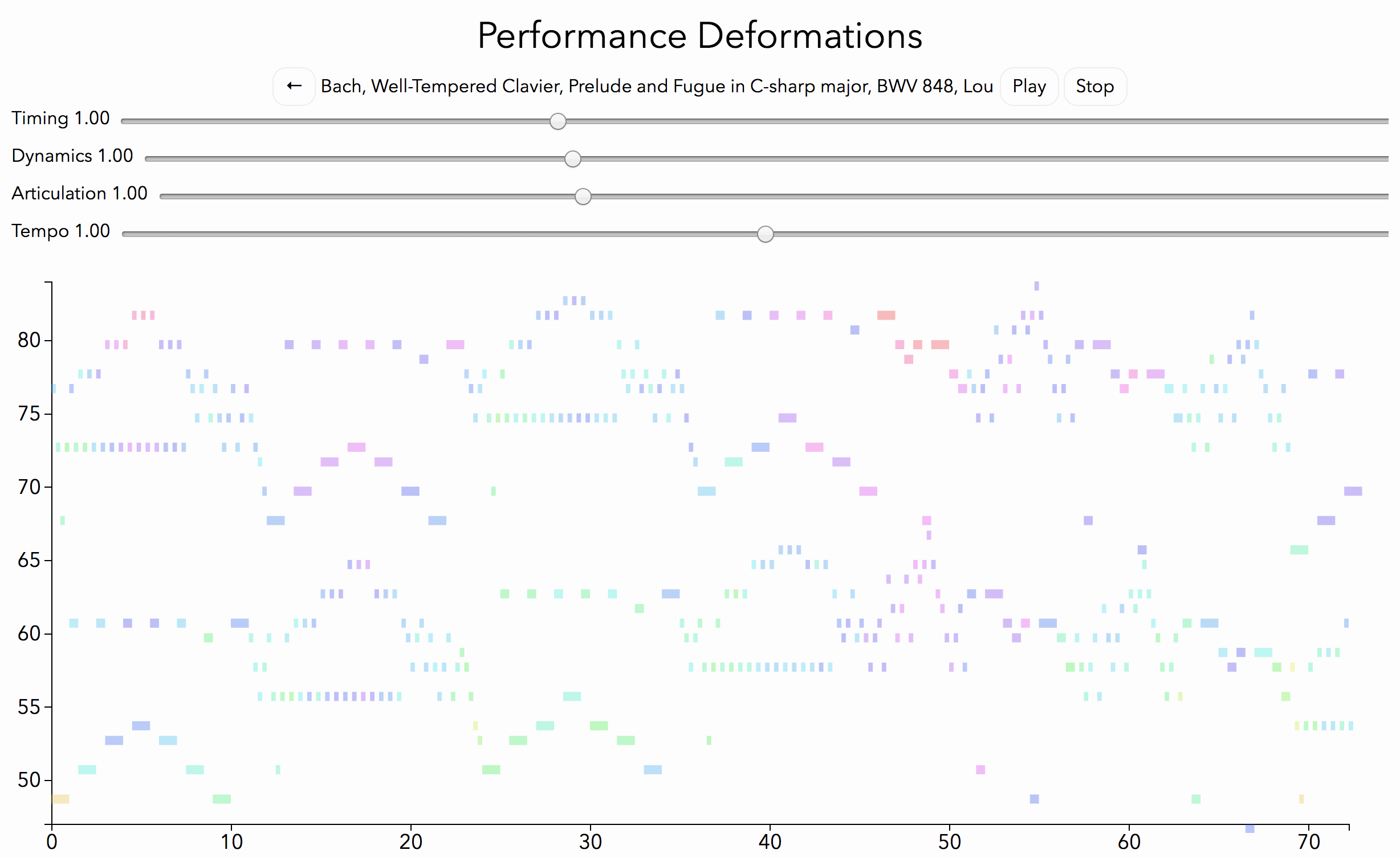

This demonstrator integrates score-informed source separation with semantic playback technologies in order to aurally highlight selected musical aspects through dynamic emphasis, spatial localisation, temporal deformation, and similar transformations. It investigates the viability of such sonifications for education, music analysis, and semantic audio editing. The system uses score-informed source separation to decompose a monaural audio recording into separate events and prepares these for an interactive player that renders audio based on semantic information. Examples created so far include a spatialisation in the form of an immersive chroma helix, which the listener can navigate in real time using mobile sensor controls, and a tool that allows users to exaggerate or flatten the idiosyncrasies of a performer’s style.
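The chroma helix spatialisation can be illustrated with a short geometric sketch. Assuming each separated event carries a MIDI pitch, the sketch places it on a helix whose angle encodes pitch class and whose height grows with pitch, so octave-related notes line up vertically. A simple inverse-distance gain then stands in for emphasis as the listener moves through the space. The function names, the `radius`, `rise_per_octave`, and `min_distance` parameters, the example events, and the inverse-distance gain law are all hypothetical illustrations, not the demonstrator's actual implementation.

```python
import math
from typing import Tuple

Point3D = Tuple[float, float, float]


def helix_position(midi_pitch: int, radius: float = 1.0,
                   rise_per_octave: float = 1.0) -> Point3D:
    """Place a pitch on a chroma helix (hypothetical parameters).

    One full turn of the helix spans an octave: the angle encodes the
    pitch class, while the height increases linearly with pitch.
    """
    pitch_class = midi_pitch % 12
    angle = 2.0 * math.pi * pitch_class / 12.0
    x = radius * math.cos(angle)
    y = radius * math.sin(angle)
    z = rise_per_octave * midi_pitch / 12.0
    return (x, y, z)


def distance_gain(listener: Point3D, source: Point3D,
                  min_distance: float = 0.1) -> float:
    """Inverse-distance gain (assumed law): nearer events sound louder.

    min_distance clamps the gain so that a source located exactly at the
    listener's position does not cause a division by zero.
    """
    d = math.dist(listener, source)
    return 1.0 / max(d, min_distance)


# Hypothetical separated events: (MIDI pitch, onset in seconds).
events = [(60, 0.0), (64, 0.5), (67, 1.0), (72, 1.5)]
# Hypothetical listener position, e.g. one updated from mobile sensor input.
listener = (0.0, 0.0, 5.5)
for pitch, onset in events:
    pos = helix_position(pitch)
    print(f"pitch {pitch} at t={onset:.1f}s -> gain {distance_gain(listener, pos):.2f}")
```

For example, `helix_position(60)` and `helix_position(72)` (C4 and C5) share the same horizontal coordinates and differ in height only by `rise_per_octave`, which is what makes octave equivalence visible as vertical alignment on the helix.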

Website Link:

https://florianthalmann.github.io/performance-playground

Publications:

Florian Thalmann, Sebastian Ewert, Mark B. Sandler, and Geraint A. Wiggins. “Spatially Rendering Decomposed Recordings: Integrating Score-Informed Source Separation and Semantic Playback Technologies.” (2015).

Florian Thalmann, Sebastian Ewert, Geraint A. Wiggins, and Mark B. Sandler. “Exploring Musical Expression on the Web: Deforming, Exaggerating, and Blending Decomposed Recordings.” (2017).