Music Encoding and Linked Data (MELD)

David Weigl & Kevin Page, University of Oxford

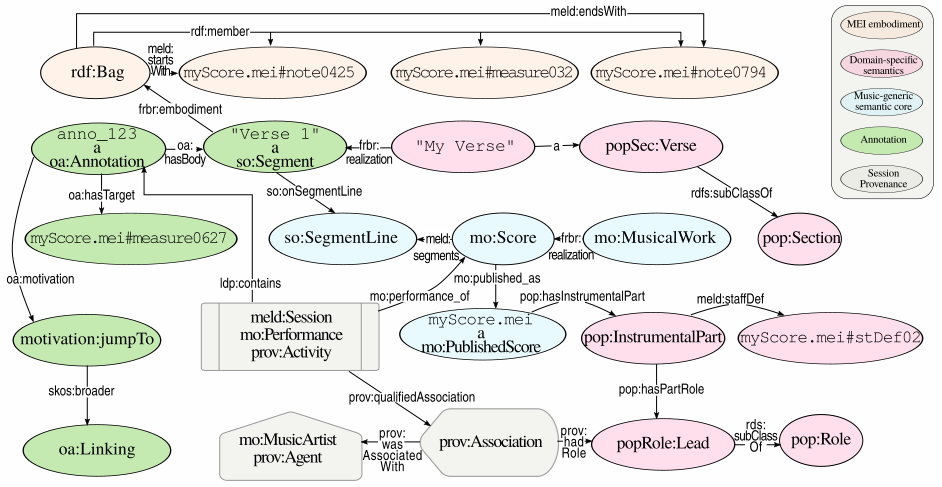

MELD is a framework that retrieves, distributes, and processes information addressing semantically distinguishable music elements.

Music is richly structured, and annotations can be used to interlink musical content within that structure. Annotations must be able to specifically address elements within this structure if they are to be described or related. In multimedia information systems, such addressing has typically been achieved using timeline anchors, e.g., an offset in milliseconds along a reference recording. Such timed offsets are not musically meaningful, and are of limited use when no reference recording is available. We can address part of this issue using the Music Encoding Initiative XML schema (MEI), which comprehensively expresses the classes, attributes, and data types required to encode a broad range of musical documents and structures.
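To make the contrast with timeline offsets concrete, the following minimal sketch shows how identifiers on MEI elements could be turned into addressable fragment URIs. It assumes that measures carry `xml:id` attributes; the abbreviated MEI snippet, the `example.org` URL, and the `measure_uris` helper are illustrative assumptions, not part of MELD.

```python
import xml.etree.ElementTree as ET
from typing import List

MEI_NS = "http://www.music-encoding.org/ns/mei"
XML_ID = "{http://www.w3.org/XML/1998/namespace}id"

# Hypothetical, heavily abbreviated MEI fragment (not a complete, valid score).
MEI_SNIPPET = """
<mei xmlns="http://www.music-encoding.org/ns/mei">
  <section>
    <measure xml:id="m1" n="1"/>
    <measure xml:id="m2" n="2"/>
  </section>
</mei>
"""

def measure_uris(mei_xml: str, base_url: str) -> List[str]:
    """Return one fragment URI per <measure> element that has an xml:id."""
    root = ET.fromstring(mei_xml.strip())
    return [
        f"{base_url}#{measure.get(XML_ID)}"
        for measure in root.iter(f"{{{MEI_NS}}}measure")
        if measure.get(XML_ID)
    ]

# The base URL is a placeholder for wherever the MEI file is published.
uris = measure_uris(MEI_SNIPPET, "https://example.org/score.mei")
print(uris)
```

Unlike a millisecond offset, each resulting URI names a musical element directly, so it remains usable when no reference recording exists.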

The MELD framework and implementation architecture augment and extend MEI structures with semantic Web Annotations capable of addressing musically meaningful score sections. Through its use of Linked Data, MELD deploys knowledge structures expressing relationships unconstrained by boundaries of the encoding schema, musical sub-domain, or use case context, supporting retrieval of a wide range of music information. Within the framework, music-generic structures are linked with, but separable from, components expressing domain-specific entities associated with concrete instantiations of musical segments. By use of the flexible and extensible Web Annotation model, new kinds of annotations are easily incorporated through customisation of the MELD JavaScript web-client via drop-in rendering and interaction handlers. Annotations can seamlessly reference external data sources, and can in turn be referenced for external analysis, reuse, and repurposing in other contexts.
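As a rough illustration of the ideas above, the sketch below builds a W3C Web Annotation as JSON-LD whose targets are MEI element URIs, then dispatches it to a handler chosen by its motivation. The `@context`, `type`, `motivation`, `body`, and `target` keys come from the Web Annotation data model; all URLs, the `make_annotation` helper, and the handler registry are hypothetical and do not reflect the actual API of the MELD JavaScript client.

```python
import json
from typing import Callable, Dict, List

def make_annotation(anno_id: str, targets: List[str], body: str,
                    motivation: str = "linking") -> dict:
    """Build a Web Annotation (JSON-LD) addressing one or more score elements."""
    return {
        "@context": "http://www.w3.org/ns/anno.jsonld",
        "id": anno_id,
        "type": "Annotation",
        "motivation": motivation,
        "body": body,              # external resource the score elements link to
        "target": list(targets),   # MEI element URIs, e.g. score.mei#m1
    }

# Hypothetical "drop-in" handlers, keyed by annotation motivation.
HANDLERS: Dict[str, Callable[[dict], str]] = {
    "linking": lambda a: f"link {a['target']} -> {a['body']}",
    "highlighting": lambda a: f"highlight {a['target']}",
}

def handle(annotation: dict) -> str:
    """Dispatch an annotation to the handler registered for its motivation."""
    handler = HANDLERS.get(annotation["motivation"])
    if handler is None:
        return "no handler registered"
    return handler(annotation)

annotation = make_annotation(
    "https://example.org/annotations/1",        # placeholder identifier
    ["https://example.org/score.mei#m1"],       # placeholder MEI measure URI
    "https://example.org/recordings/take-3",    # placeholder external resource
)
print(json.dumps(annotation, indent=2))
print(handle(annotation))
```

Supporting a new kind of annotation in this sketch means registering one more entry in `HANDLERS`, which loosely mirrors the drop-in handler approach described above.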