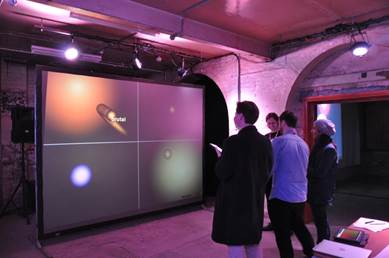

Moodplay Interactive Experience

Mathieu Barthet, Alo Allik, György Fazekas, Queen Mary University of London

Moodplay is a system that allows users to collectively control music and lighting effects to express desired emotions. We explore how affective computing, artificial intelligence, semantic web technologies and synthesis can be combined to provide new personalised musical experiences.

- Moodplay allows users to collectively select music and control lighting effects that convey desired emotions.

- The system combines audio and semantic web technologies to provide personalised and interactive musical experiences.

- To vote for music, participants choose a degree of energy (Arousal) and pleasantness (Valence) using our Mood Conductor web app.
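
As a rough illustration of collective voting, each vote can be treated as a point in the Arousal-Valence plane. The sketch below averages the votes and picks the track whose mood coordinates lie closest to that average. How Moodplay actually combines votes is not described here, so the averaging rule, the function names and the track dictionaries are all assumptions made for the example.

```python
import math


def mean_vote(votes):
    """Average a list of (valence, arousal) votes into a single point.

    Averaging is an assumed aggregation rule, chosen for simplicity.
    """
    if not votes:
        raise ValueError("at least one vote is required")
    n = len(votes)
    valence = sum(v for v, _ in votes) / n
    arousal = sum(a for _, a in votes) / n
    return valence, arousal


def nearest_track(target, tracks):
    """Return the track whose (valence, arousal) is closest to target.

    Each track is a dict with hypothetical 'valence' and 'arousal' keys.
    """
    target_valence, target_arousal = target
    return min(
        tracks,
        key=lambda t: math.hypot(
            t["valence"] - target_valence, t["arousal"] - target_arousal
        ),
    )


# Example with made-up tracks and votes.
example_tracks = [
    {"title": "Track A", "valence": 0.8, "arousal": 0.5},
    {"title": "Track B", "valence": -0.7, "arousal": -0.4},
]
example_votes = [(0.5, 0.5), (0.7, 0.3)]
chosen = nearest_track(mean_vote(example_votes), example_tracks)
```

Other aggregation rules, such as clustering the votes and following the largest cluster, would fit the same interface.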

The audio client queries mood coordinates and track metadata from a triple store using a SPARQL endpoint.
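
A minimal sketch of such a query, assuming a standard SPARQL 1.1 endpoint that returns JSON results. The endpoint URL, the `ex:` vocabulary and the property names are hypothetical placeholders, since the actual Moodplay schema is not given here.

```python
import json
import urllib.parse
import urllib.request

# Hypothetical endpoint URL: replace with the real triple store address.
ENDPOINT = "http://localhost:3030/moodplay/sparql"

# Hypothetical vocabulary: the ex: prefix and property names are placeholders.
QUERY_TEMPLATE = """
PREFIX ex: <http://example.org/moodplay#>
SELECT ?track ?title ?artist ?valence ?arousal
WHERE {{
  ?track ex:title ?title ;
         ex:artist ?artist ;
         ex:valence ?valence ;
         ex:arousal ?arousal .
}}
LIMIT {limit}
"""


def build_query(limit=100):
    """Fill the query template with a row limit."""
    return QUERY_TEMPLATE.format(limit=int(limit))


def fetch_results(endpoint=ENDPOINT, limit=100):
    """Send the query over HTTP GET and return the decoded JSON results."""
    params = urllib.parse.urlencode({"query": build_query(limit)})
    request = urllib.request.Request(
        endpoint + "?" + params,
        headers={"Accept": "application/sparql-results+json"},
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)


def parse_tracks(results):
    """Convert SPARQL JSON result bindings into plain track dictionaries."""
    tracks = []
    for row in results["results"]["bindings"]:
        tracks.append({
            "uri": row["track"]["value"],
            "title": row["title"]["value"],
            "artist": row["artist"]["value"],
            "valence": float(row["valence"]["value"]),
            "arousal": float(row["arousal"]["value"]),
        })
    return tracks
```

A client would call `parse_tracks(fetch_results())` once the endpoint and vocabulary are set to the real values. The parsing step relies only on the standard SPARQL JSON results layout, in which each variable binding carries its data under a `value` key.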

The mood metadata is crowd-sourced from Last.fm tags, which are converted to Arousal-Valence coordinates using the Affective Circumplex Transform.
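
To show the general idea of turning tags into a mood position, the sketch below averages per-tag coordinates weighted by how often each tag was applied to a track. This is a simplified stand-in and not the Affective Circumplex Transform itself. The lookup table values are hand-picked for illustration and the function name is hypothetical.

```python
# Illustrative (valence, arousal) positions in [-1, 1]. These values are
# hand-picked assumptions, not output of the Affective Circumplex Transform.
TAG_COORDINATES = {
    "happy": (0.8, 0.5),
    "calm": (0.6, -0.6),
    "sad": (-0.7, -0.4),
    "angry": (-0.7, 0.7),
}


def track_mood(tag_counts):
    """Estimate a track's (valence, arousal) from its tag counts.

    Takes a dict mapping tag to the number of times it was applied and
    returns the count-weighted mean of the known tag coordinates, or
    None when no tag appears in the lookup table.
    """
    total = 0
    valence_sum = 0.0
    arousal_sum = 0.0
    for tag, count in tag_counts.items():
        coordinates = TAG_COORDINATES.get(tag.lower())
        if coordinates is None or count <= 0:
            continue  # skip tags with no known mood position
        valence, arousal = coordinates
        valence_sum += valence * count
        arousal_sum += arousal * count
        total += count
    if total == 0:
        return None
    return valence_sum / total, arousal_sum / total


# Example: a track tagged "happy" three times and "calm" once.
example_mood = track_mood({"happy": 3, "calm": 1, "live": 10})
```

Tags without a known mood position, such as "live" in the example, are ignored rather than pulling the estimate towards the origin.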

Download Moodplay poster here.