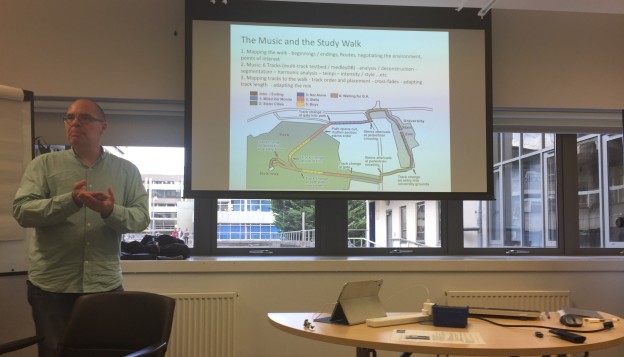

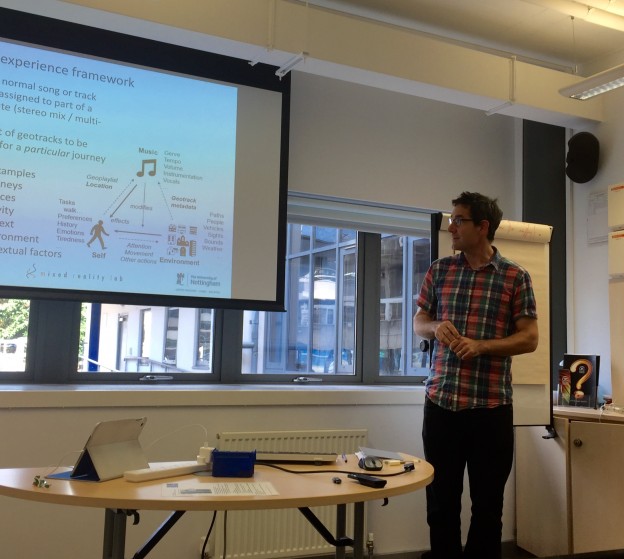

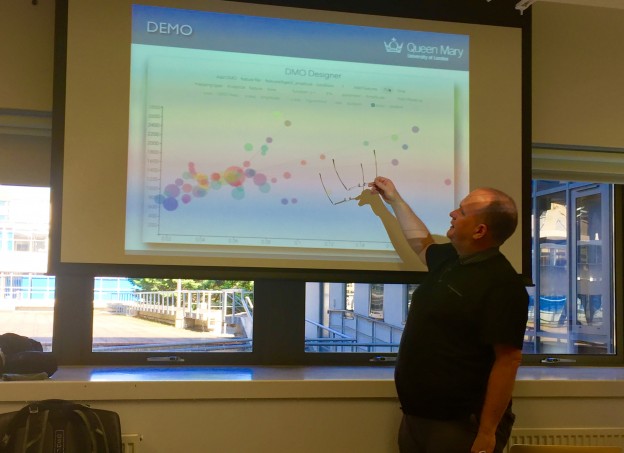

Photo 22: Oxford workshop 30 Sept 15

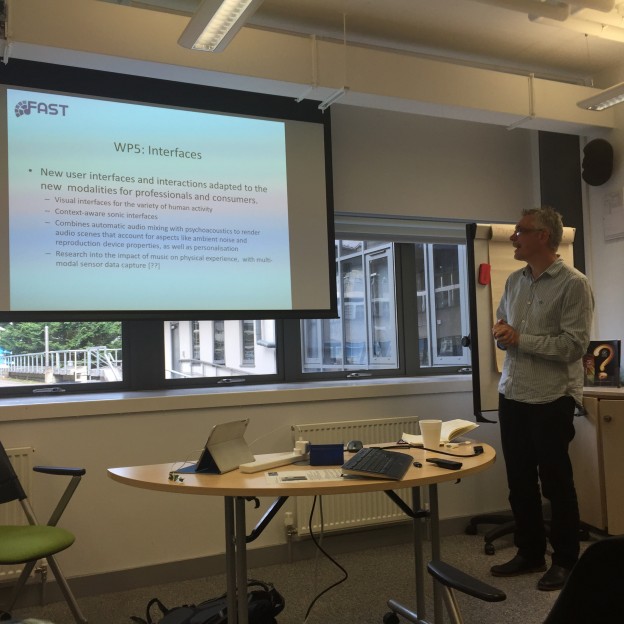

Photo 21: Oxford workshop 30 Sept 15

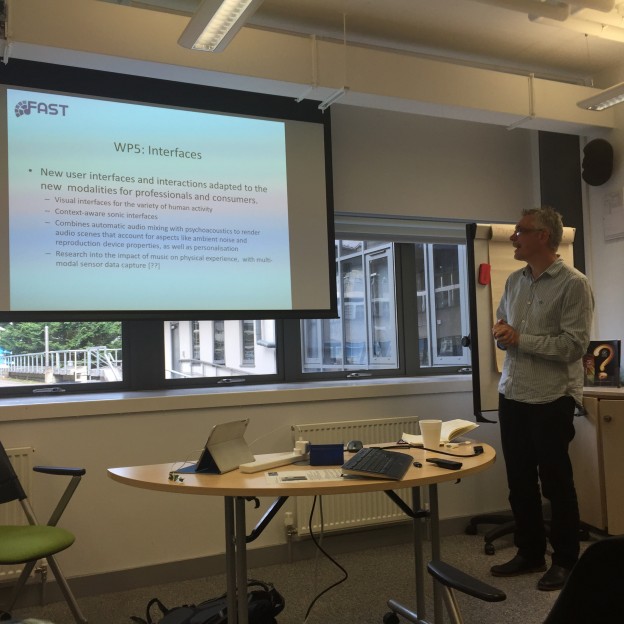

Photo 20: Oxford workshop 30 Sept 15

C4DM team present at Audio Mostly

At the recent Audio Mostly conference, the C4DM team presented their Moodplay experience project: “Moodplay: An Interactive Mood‐Based Musical Experience”. (Paper reference: Barthet, M., Fazekas, G., Allik, A., Sandler, M., Moodplay: An Interactive Mood‐Based Musical Experience, Proc. ACM Audio Mostly, Greece, 2015.)

The full conference programme can be viewed here.

The theme of this year’s conference was “Sound, Semantics and Social Interaction”. As the organisers explained in their Call for papers: “the conference aims at confronting issues related to audio design, semantic processing and interaction that can be part of enhanced multimodal HMI in the social media landscape. It also attempts to investigate their role and involvement in the deployment of innovative web and multimedia semantic services as part of the transition to the Web3.0 era. A representative theme example that can be drawn here is that audio content production, music clips recording, editing, and sound design processes can be collaboratively applied within the social media networking context, while registering content shares and tagging with user feedback for semantically enhanced interaction. Moreover, besides technology-oriented approaches to the conference theme, submissions that refer to interdisciplinary work in this domain are strongly encouraged, including i) psychological research on the influence of auditory cues in shaping human multimodal perception, semantic processing, emotional affect, and social interaction within rich context environments, and ii) presentation of new practices of using sound as a medium for enhancing social interaction in new media artworks, and soundscapes.”

The team’s research is also one of the FAST Demonstrator projects. Previously, the team presented Moodplay at the Digital Shoreditch Festival in May 2015. A one-minute video can be viewed below:

Humanising music technology

Kingston University, Monday 16 November 2015, 13:30 to 20:00 hrs (GMT)

This event focuses on the role of technology in our engagement with music and the intricate relationship between technology and creativity. The event will cover a broad range of approaches, from art music to the fan-based Chiptune phenomenon. Participants will also have the opportunity to make music using a ‘hackable’ electronic instrument.

Please note that the D-Box workshop is now fully booked. There are still places left for the main event starting at 4 pm.

Programme

13:30 – Musical Hacking with Andrew McPherson (QMUL) and Alan Chamberlain (Nottingham University)

16:00 – Opening

16:10 – TaCEM Project with Michael Clarke, Frédéric Dufeu and Peter Manning

17:00 – Short presentations

17:40 – Dinner break

19:00 – Concert with Torbjörn Hultmark and Matt Wright

A lecture-performance will be given by the turntablist Matt Wright and trumpeter/soprano-trombonist Torbjörn Hultmark, playing a mixture of purely acoustic sounds as well as sounds generated and processed electronically – from the subtly quiet and beautiful to the very powerful and highly energetic. A wide range of emotions, playing techniques and styles. Sometimes composed, sometimes improvised – always unpredictable.

As a thread through this performance runs the very personal narrative of how these two musicians have individually, and now for the first time together, developed their work with music and technology. Their belief is that technology is part of humanity, and humanity part of the technological.

Further details and registration are available at:

https://www.eventbrite.co.uk/e/humanising-music-technology-tickets-18756264492

A flier can be downloaded here.

Report on the Linked Music Hackathon, 9 October 2015, London

by Kevin Page, Oxford e-Research Centre, University of Oxford

On Friday 9th October, over 25 academics and developers gathered for a Linked Music Hackathon, the culminating event of the Semantic Linking of BBC Radio (SLoBR) project led by Dr Kevin Page from the Oxford e-Research Centre. The project, funded by the EPSRC Semantic Media Network, has applied Linked Data and Semantic Web technologies to combine cultural heritage and media industry sources for the benefit of academic and commercial research. This research will be developed and extended within the Fusing Audio and Semantic Technology (FAST) project.

Attendees were able to use data produced by SLoBR and more than 20 other Linked Data music sources to produce new mashups, which were prototyped and presented on the same day. A variety of hacks were shown at the end of the event, with the winning project, “Geobrowsing using RISM”, chosen by popular vote. A Radio 1 team were also in attendance to interview attendees for the BBC’s “Make It Digital” campaign.

The hackathon was hosted at Goldsmiths, University of London, by colleagues from SLoBR and assisted by the Transforming Musicology project. Organisers and participants from the FAST project included David De Roure, Graham Klyne, Kevin Page, John Pybus, and David Weigl.

‘Listening in the Wild’, Queen Mary University of London

Research workshop: Listening in the Wild: Animal and Machine Audition in Multisource Environments, Queen Mary University of London, Friday 28th August, 10:30am-5pm

* How do animals recognise sounds in noisy multisource environments?

* How should machines recognise sounds in noisy multisource environments?

This workshop will bring together researchers in engineering disciplines (machine listening, signal processing, computer science) and biological disciplines (bioacoustics, ecology, perception and cognition), to discuss complementary perspectives on making sense of natural and everyday sound.

Registration details and further information are available via the Eventbrite link here.

INVITED SPEAKERS:

Annamaria Mesaros (Tampere University of Technology, Finland)

Sound event detection in everyday environments

Alison Johnston (British Trust for Ornithology)

What proportion of birds do we detect? Variation in bird detectability by species, habitat and observer

Jordi Bonada (Universitat Pompeu Fabra, Barcelona)

Probabilistic-based synthesis of animal vocalizations

Sarah Angliss (composer, roboticist and sound historian, London)

Rob Lachlan (Queen Mary University of London)

Analysing the evolution of complex vocal traits: song learning precision and syntax in chaffinches

Alan McElligott (Queen Mary University of London)

Mammal vocalisations: from quality to emotions

Emmanouil Benetos (City University London)

Matrix factorization methods for environmental sound analysis

VENUE:

Arts Two Lecture Theatre,

Arts Two Building,

Queen Mary University of London,

Mile End Road,

London E1 4NS.

CONTACT:

Dan Stowell

Phone: +44 20 7882 7986

Email: dan.stowell@qmul.ac.uk

Carolan Guitar Acquires Siblings

by Steve Benford, Mixed Reality Lab, University of Nottingham

The Nottingham project team has been developing a guitar called the Carolan guitar or Carolan (named after the legendary composer Turlough O’Carolan, the last of the great blind Irish harpers, and an itinerant musician who roamed Ireland at the turn of the 18th century, composing and playing beautiful Celtic tunes). Like its namesake, Carolan is a roving bard; a performer that passes from place to place, learning tunes, songs and stories as it goes and sharing them with the people it encounters along the way. This is possible because of a unique technology that hides digital codes within the decorative patterns adorning the instrument. These act somewhat like QR codes in the sense that you can point a phone or tablet at them to access or upload information via the Internet. Unlike QR codes, however, they are aesthetically beautiful and form a natural part of the instrument’s decoration. This unusual and new technology enables our guitar to build and share a ‘digital footprint’ throughout its lifetime, but in a way that resonates with both the aesthetic of an acoustic guitar and the craft of traditional luthiery.

We are now bringing you some exciting news. The Carolan has acquired some siblings, although you might be hard pressed to spot the family resemblance. The Carolan team (http://carolanguitar.com/2014/07/25/team/) has been collaborating with Andrew McPherson and his group at the Augmented Instruments Laboratory at Queen Mary to explore how they might decorate other musical instruments with their life stories. This collaboration, which is part of the FAST project, aims to explore new forms of digital music object.

Andrew’s lab creates new musical instruments and also augments traditional ones with sensors, new types of sound production and new ways of interacting. A nice example of Andrew’s previous work was to augment traditional piano keyboards with capacitance sensors so that pianists could naturally create vibratos, bends and other effects by moving their fingers on individual keys – an idea called TouchKeys. See: https://youtu.be/6fhmIqKHGs8

In a quite different vein, Andrew’s recent work has been exploring the design of ‘hackable instruments’: new forms of electronic instrument that, while superficially simple, can be opened up and radically modified – ‘hacked’ – by musicians to create highly personalised instruments and performances. An early example is the D-Box, an electronic instrument that can easily be built from scratch and whose innards can be messed with in all sorts of ways without frying the circuitry. See: https://youtu.be/JOAO-EUtrGQ

This inherent hackability may lead to individual D-Boxes becoming associated with rich histories of how they have been modified and used to create particular sounds and performances. This prompted the Carolan team to ask whether we might decorate D-Boxes with interactive patterns and so enable their owners to document and recall the unique history of hacks associated with each instrument.

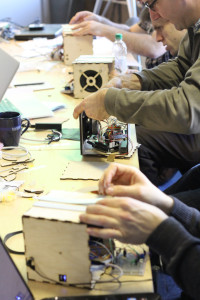

In January 2015, we hosted a FAST project workshop at the Mixed Reality Lab to build some D-Boxes and design some Artcodes (the new and more friendly name for Aestheticodes) that might be used to decorate them. First we assembled our D-Boxes …

Then we spent some time sketching Artcode decorations for them …

After the workshop, Adrian from the Carolan team designed a series of Artcode D-Box logos, a distinct one for each of six instruments. These were laser-etched and cut into the wooden sides of our D-Boxes to create a family of instruments that can be scanned with a phone or tablet to read and update their individual blogs.

We are looking forward to releasing our D-Boxes into the wild to see where they might go, how they will be hacked and also how their interactive decorations might help maintain their histories.

Perhaps Carolan might even get to meet its new siblings? Anyone for a D-Box and acoustic guitar jam?