Friday, 1 November 2019, 1:30 pm – 5:30 pm

The Neuron pod, Whitechapel, Queen Mary University of London

The FAST partners from the Centre for Digital Music (Queen Mary), the Oxford e-Research Centre (Oxford University) and the Mixed Reality Lab (Nottingham University) will come together on Friday 1 November to celebrate the outcomes of the five-year EPSRC-funded FAST project, which comes to an end in December 2019. The event will consist of a mixture of talks, industry presentations and project demos. There will be plenty of time for socialising.

Final Programme

1:30 – 1:40 pm: Welcome by Mark Sandler, Queen Mary (FAST Principal Investigator)

1:40 – 2:10 pm: Academic & Principal Co-Investigator Talks

From Assisting Creativity to Human-Machine Co-Creation

Dave de Roure, Oxford

The UKRI Centre for Doctoral Training in Artificial Intelligence and Music

Simon Dixon, Queen Mary

The UKRI Centre for Doctoral Training in Artificial Intelligence and Music (AIM) will train a new generation of researchers who combine state-of-the-art ability in artificial intelligence (AI), machine learning and signal processing with cross-disciplinary sensibility to deliver groundbreaking original research and impact within the UK Creative Industries (CI) and cultural sector. Based at Queen Mary University of London (QMUL), the CDT will receive £6.2M from UKRI, £2M from QMUL and over £1M from industry partners, who include key players in the music industry (Abbey Road Studios, Apple, Deezer, Music Tribe Brands, Roli, Spotify, Steinberg, Solid State Logic and Universal Music) as well as a range of innovative SMEs. AIM will run from 2019 to 2027 and will train five annual cohorts of 12–15 PhD students, starting in September 2019.

Challenges and Opportunities of Using Audio Semantics in Music Production

George Fazekas, Queen Mary

FXive: Transformative Innovations in Sound Design

Joshua Reiss, Queen Mary

Sound effects for games, film and TV are generally sourced using large databases of pre-recorded audio samples. These are inflexible, limited and uncreative. Our alternative is procedural audio, where sounds are created using software algorithms. Supported by the FAST grant, we have launched the high-tech spin-out company FXive. FXive’s transformative innovation allows all sound effects to be created in real time and with intuitive controls, without the use of recorded samples, thus creating fully immersive auditory worlds. We aim to completely replace the reliance on sample libraries with our new approach, which is more creative, more enjoyable, higher quality and more productive. Longer-term, it can lead to fully computer-generated sound design, just as Toy Story revolutionised the industry with fully computer-generated animation.

Post-FAST Opportunities: Musical Knowledge, Inference and Formal Methods for Semantic Deployment

Geraint Wiggins, Queen Mary

The FAST Blues

Steve Benford, Nottingham

2:15 – 3:15 pm: FAST project demonstrations

D1. Climb! Interactive Archive (Chris Greenhalgh and Adrian Hazzard, Nottingham)

D2. rCALMA (Kevin Page, Oxford)

D3. Plunderphonics (Florian Thalmann, Queen Mary)

D4. SOFA (John Pybus and Graham Klyne, Oxford)

D5. The Grateful Dead Concert Explorer (Thomas Wilmering, Queen Mary)

D6. Moodplay (tbc) (Alo Allik, Queen Mary)

3:15 – 3:45 pm: Coffee and cakes

3:45 – 4:15 pm: Industry Talk

Music Informatics in Academia and Industry — Personal Insights

Matthias Mauch, Apple

When I started working in music informatics, industry involvement was minimal. I’m going to trace my own journey, from my PhD studies at Queen Mary, via other academic jobs, to my current work in the Music Science team at Apple Media Products. All stages have had their unique fascination, and I will share some insights on similarities and differences on the way.

4:15 – 5:00 pm: FAST project demos (cont’d)

D7. Carolan Guitar (Chris Greenhalgh and Adrian Hazzard, Nottingham)

D8. Folk Song Network Based on Different Similarity Measures (Cornelia Metzig, Queen Mary)

D9. Jam with Jamendo (Johann Pauwels, Queen Mary)

D10. Lohengrin Digital Companion (Kevin Page, Oxford)

D11. Notes and Chords (Ken O’Hanlon, Queen Mary)

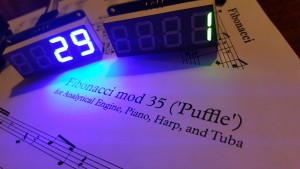

D12. Numbers into Notes (Dave de Roure, Oxford)

D13. Intelligent Music Production (David Moffat, Queen Mary)

5:15 pm: End

6:00 pm: Dinner at Pizza East, 56 Shoreditch High Street, E1 6JJ

Further information

FAST demonstrators:

https://www.semanticaudio.ac.uk/demonstrators/

FAST videos:

https://www.semanticaudio.ac.uk/vimeos/

FAST Industry Day at Abbey Road Studios:

https://www.semanticaudio.ac.uk/events/fast-industry-day/

Research in the FAST project and several other projects has demonstrated a new way to perform sound design. High quality, artistic sound effects can be achieved by the use of lightweight and versatile sound synthesis models. Such models do not rely on stored samples, and provide a rich range of sounds that can be shaped at the point of creation.
