Music’s changing fast: FAST is changing music. Showcasing the culmination of five years of digital music research, the FAST IMPACt project (Fusing Audio and Semantic Technologies for Intelligent Music Production and Consumption), led by Queen Mary University of London, hosted an invite-only industry day at Abbey Road Studios on Thursday 25 October, 2 – 8 pm. Presented by Professor Mark Sandler, Director of the Centre for Digital Music at Queen Mary, the event introduced artists, journalists and industry professionals to the next-generation technologies that will shape the music industry – from production to consumption.

FAST is looking at how new technologies can positively disrupt the recorded music industry. Research from across the project was presented to the audience, with work from partners at the University of Nottingham and the University of Oxford presented alongside that from Queen Mary. The aim was that, by the end of the FAST Industry Day, people would have some idea of how AI and the Semantic Web can couple with signal processing to overturn conventional ways of producing and consuming music. Along the way, industry attendees were able to preview some cool and interesting new ideas, apps and technology that the FAST team showcased.

One hundred and twenty (120) attendees were treated to an afternoon and evening of talks, demonstrations, the Climb! performance, and an expert panel discussion with Jon Eaves (The Rattle), Paul Sanders (state51), Peter Langley (Origin UK), Tracy Redhead (award-winning musician, composer and interactive producer, University of Newcastle, Australia), Maria Kallionpää (composer and pianist, Hong Kong Baptist University) and Mark d’Inverno (Goldsmiths), who chaired the panel. Rivka Gottlieb, harpist and music therapist, performed musical pieces throughout the day based on her collaboration with PI David de Roure and the ‘Numbers into Notes’ project at the Oxford e-Research Centre. Other speakers included George Fazekas, who outlined the Audio Commons Initiative; Tracy Redhead and Florian Thalmann, who presented their work on the semantic player technologies; and Ben White, who spoke about the Open Music Archive project (exploring the intersection between art, music and archives).

The FAST Industry Day was opened by Lord Tim Clement-Jones (Chair of Council, Queen Mary University of London) and was compered by Professor Mark d’Inverno (Professor of Computing at Goldsmiths College, London).

Below are some highlights:

Carolan Guitar: Connecting Digital to the Physical – The Carolan Guitar tells its own story. Play the guitar, contribute to its history, scan its decorative patterns and discover its story. Carolan uses a unique visual marker technology that enables the physical instrument to link to the places it’s been, the people who’ve played it and the songs it’s sung, and deep learning techniques to better event detection. https://carolanguitar.com

FAST DJ – FAST DJ is a web-based automatic DJ system and plugin that can be embedded into any website. It generates transitions between any pair of successive songs and uses machine learning to adapt to the user’s taste via simple interactive decisions.

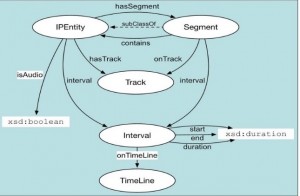

Grateful Dead Concert Explorer – A Web service for the exploration of recordings of Grateful Dead concerts, drawing its information from various Web sources. It demonstrates how Semantic Audio and Linked Data technologies can produce an improved user experience for browsing and exploring music collections. See Thomas Wilmering explaining more about the Grateful Dead Concert Explorer: https://vimeo.com/297974486

Jam with Jamendo – Jam with Jamendo brings music learners and unsigned artists together by recommending suitable songs as new and varied practice material. In this web app, users are presented with a list of songs based on their selection of chords. They can then play along with the chord transcriptions or use the audio as backing tracks for solos and improvisations. Using AI-generated transcriptions makes it trivial to grow the underlying music catalogue without human effort. See Johan Pauwels explaining more about Jam with Jamendo: https://vimeo.com/297981584

MusicLynx – a web platform for music discovery that collects information and reveals connections between artists from a range of online sources. The information is used to build a network that users can explore to discover new artists and how they are linked together.

The SOFA Ontological Fragment Assembler – enables the combination of musical fragments – Digital Music Objects, or DMOs – into compositions, using semantic annotations to suggest compatible choices.

Numbers into Notes – experiments in algorithmic composition and the relationship between humans, machines, algorithms and creativity. See David de Roure explaining more about the research: https://vimeo.com/297989936

rCALMA Environment for Live Music Data Science – a big data visualisation of key in the Live Music Archive using Linked Data to combine programmes and audio feature analysis. See David Weigl talking about rCALMA: https://vimeo.com/297970119

Climb! Performance Archive – Climb! is a non-linear composition for Disklavier piano and electronics. This web-based archive creates a richly indexed and navigable archive of every performance of the work, allowing audiences and performers to engage with the work in new ways.

The FAST project brings together labs from three of the UK’s top universities: Queen Mary’s Centre for Digital Music, the University of Nottingham’s Mixed Reality Lab and the University of Oxford’s e-Research Centre.

More about the FAST Industry Day:

https://www.semanticaudio.ac.uk/events/fast-industry-day

Full list of FAST demonstrators:

https://www.semanticaudio.ac.uk/demonstrators/

News item on Audio Commons demonstrators at the FAST industry day:

https://www.audiocommons.org/2018/10/23/abbey-road-industry.html

Research in the FAST project and several other projects has demonstrated a new way to perform sound design. High quality, artistic sound effects can be achieved by the use of lightweight and versatile sound synthesis models. Such models do not rely on stored samples, and provide a rich range of sounds that can be shaped at the point of creation.