Fusing Audio and Semantic Technologies

For Intelligent Music Production and Consumption

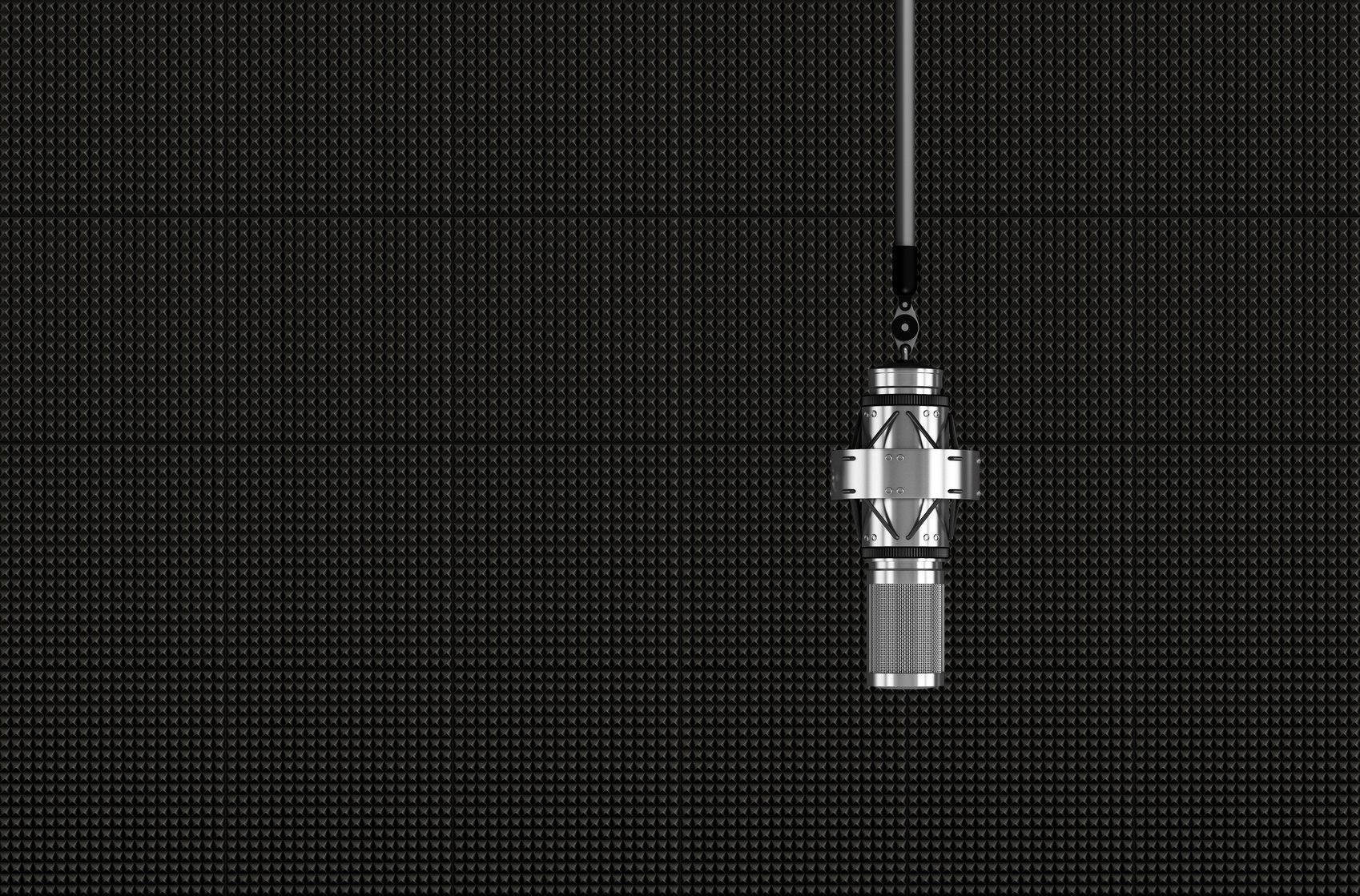

Dedicated to the life and works of Alan Blumlein