by Sean McGrath, Mixed Reality Lab, University of Nottingham

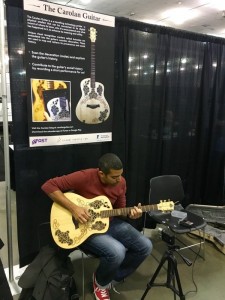

The Rough Mile is funded by the EPSRC’s FAST project (Fusing Semantic and Audio Technologies for Intelligent Music Production and Consumption, EPSRC EP/L019981/1) and based at the Mixed Reality Lab at the University of Nottingham. It is a two-part, location-based audio walk that combines principles of immersive theatre with novel audio technologies, allowing people to give each other performance-based gifts built around digital music.

Listening to recorded music is generally construed as a passive act, yet it can be a passionately felt element of individual identity as well as a powerful mechanism for deepening social bonds. Understood through the lens of intermedial performance, the intensely meaningful act of listening to and sharing digital music holds enormous performative potential. Our primary research method is to explore the dramaturgical and scenographic potential of site-specific theatre in relation to music. Specific contexts of listening can forge powerful affective connections between the music, the listener, and the many memories and emotions that the music may trigger. We augment these site-specific approaches with performance-based examinations of walking practices, especially Heddon and Turner’s (2012) feminist interrogation of the dérive, since music often shapes a person’s journey as much as it shapes any destination they reach.

For this project, part of the EPSRC-funded FAST Programme Grant, we are creating a system through which people can devise ‘musical experiences’ tied to a particular location or route and share them with others. Creators craft an immersive and intermedial experience drawing on music, narration, imagery, movement, and engagement with the particularities of each location, motivated by and imbued with the personal meanings and memories of both the creator and the recipient. We believe that this fluid engagement with digital media, technology, identity, and place will provide insights into the relationships between commercial music, the deeply personal significance that such music holds for individuals, the performativity inherent in the shared act of listening, and site-based intermedial performance.

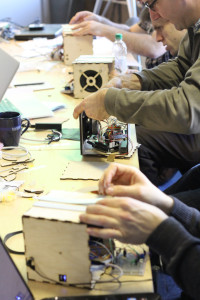

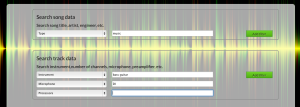

We are developing a two-part, location-based audio walk performance for pairs of friends using Professor Chris Greenhalgh’s Daoplayer. Participants are drawn into a fictional world that prompts them to consider pieces of music that hold personal significance in relation to their friend. Over the course of the performance they choose songs and share the stories behind them. These are then combined into a new audio walk performance, this time as a gift for their friend to experience. By focusing on the twin roles of memory and imagination in the audience experience, the work turns performance practice into a means of engaging more fully with digital media, such as personal photos and music, in which people invest profound personal significance.

The first part is an immersive and intermedial audio walk drawing on music, narration, imagery, movement, and engagement with the particularities of its location in central Nottingham. In the second part, participants retrace their original route, this time listening to the songs their friend chose for them, contextualised by snippets of audio from their friend’s verbal contributions. The musical gift is motivated by and imbued with the personal meanings and memories of both the giver and the receiver. We believe that this fluid engagement with digital media, technology, identity, and place will provide insights into the relationships between commercial music, the deeply personal significance that such music holds for individuals, location, movement, and performance.